A Multi-Container Deployment with Kubernetes

Single container deployment

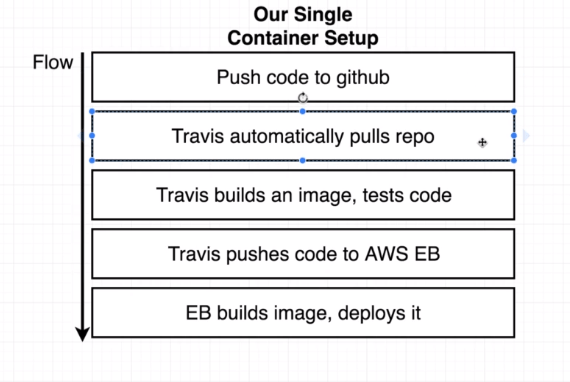

In a previous post on our blog we built a production-grade workflow to explain the use of Docker Compose, and we finished by deploying a single container to Amazon Elastic Beanstalk.

- The entire application was very simple. It was a single React application backed up by an Nginx server.

- We weren’t relying upon any outside services or databases or anything like that.

- We were building our image multiple times. We built the image on Travis CI when we ran our tests, and we built it a second time after Travis pushed our code to Amazon Elastic Beanstalk. That probably wasn’t the best approach, because we were using our web server to build the image. We want the web server to be concerned only with serving the web application, not with the extra work of building an image.

Our Single Container Setup Flow

Kubernetes: Multiple container deployment

We will now build a multi-container application that makes use of multiple databases or information sources, tie it all together with Docker and Docker Compose, and finally deploy it to Amazon Elastic Beanstalk as a multi-container application.

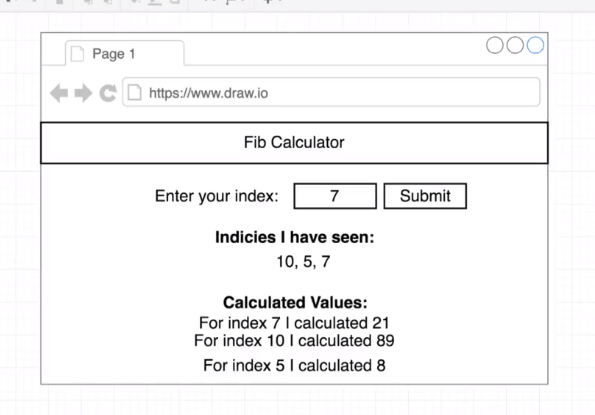

The application uses several containers, each running a different service, to achieve its goal: in this case, a fancy Fibonacci calculator.

Code download

Application Architecture

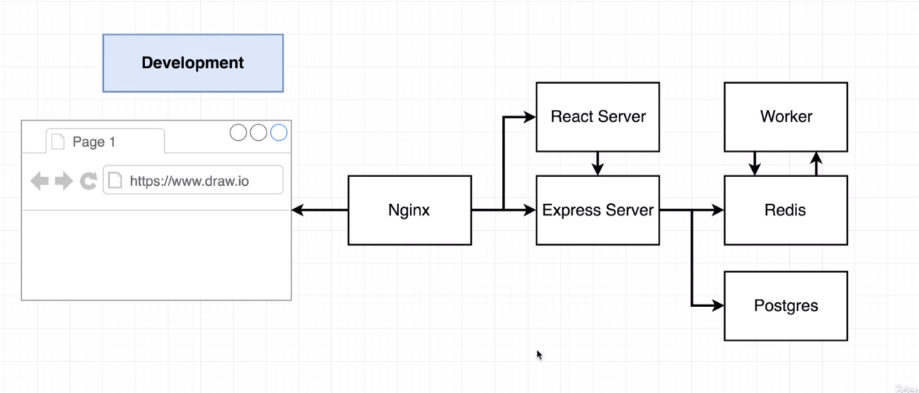

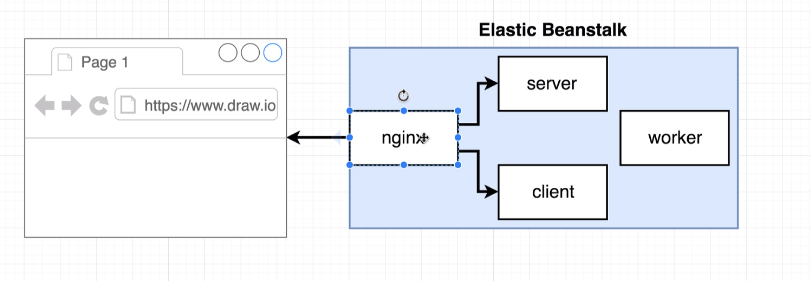

- The Nginx server does the routing. It decides whether the browser is trying to access the React application or the back end API. If the browser is requesting front end assets such as an HTML or JavaScript file, Nginx routes the request to the running React server. If the request is instead aimed at the back end API, which we use for submitting numbers and retrieving values, Nginx routes it to an Express server.

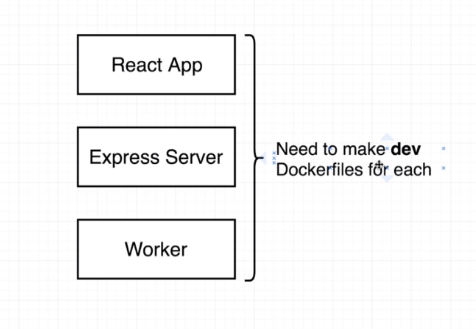

“Dockerizing” Multiple Services

We must create a Dockerfile.dev file for each service.

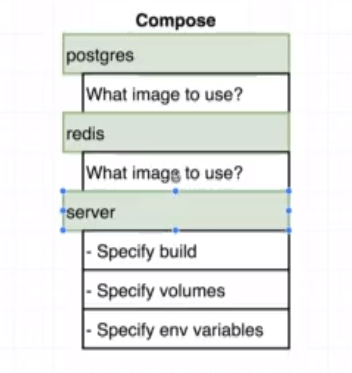

Now that we have Dockerfiles for each of those projects, made specifically for the development environment, we’re going to put together a Docker Compose file as well, just as we did for the previous application.

We should add Postgres, Redis and Nginx as services too.
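As a rough illustration, a development Compose file that wires the three project services together with Postgres, Redis and Nginx might look like the sketch below. The folder names (`./client`, `./server`, `./worker`, `./nginx`), the host port `3050`, the placeholder password and the environment variable names are assumptions made for the example, not values taken from the project.

```yaml
# docker-compose-dev.yml (hypothetical sketch; names and values are assumptions)
version: "3"
services:
  postgres:
    image: postgres:latest
    environment:
      - POSTGRES_PASSWORD=postgres_password   # placeholder, change it
  redis:
    image: redis:latest
  nginx:
    restart: always
    build:
      context: ./nginx
      dockerfile: Dockerfile.dev
    ports:
      - "3050:80"          # host port is an arbitrary choice
    depends_on:
      - server
      - client
  server:
    build:
      context: ./server
      dockerfile: Dockerfile.dev
    environment:
      # Service names double as hostnames on the Compose network.
      - REDIS_HOST=redis
      - REDIS_PORT=6379
      - PGHOST=postgres
      - PGPORT=5432
      - PGUSER=postgres
      - PGPASSWORD=postgres_password
      - PGDATABASE=postgres
  client:
    build:
      context: ./client
      dockerfile: Dockerfile.dev
  worker:
    build:
      context: ./worker
      dockerfile: Dockerfile.dev
    environment:
      - REDIS_HOST=redis
      - REDIS_PORT=6379
```

Each `build` entry points at the `Dockerfile.dev` of one service, while Postgres and Redis simply reuse public images.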

Continuous Integration Workflow for Multiple Images

Having Elastic Beanstalk build our images was probably not a good approach: we were rebuilding everything far more often than necessary, and we were relying on a running web server to download a bunch of dependencies and build an image, which kept it from doing its primary job of serving web requests.

We’re going to use a slightly different deployment flow, although in general most of these pieces are going to look very similar.
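The exact flow is not spelled out in the text, so the following `.travis.yml` is only a sketch of one common arrangement: the CI server builds a test image, runs the tests, then builds the production images and pushes them to a registry, so the web server never has to build anything. The `example/` image names, the folder names, the `npm test` command, the presence of a production `Dockerfile` in each folder and the `DOCKER_ID` / `DOCKER_PASSWORD` variables are all assumptions.

```yaml
# .travis.yml (hypothetical sketch, not the project's actual file)
language: generic
services:
  - docker

before_install:
  # Build a development image of the client so its tests can run in CI.
  - docker build -t example/client-test -f ./client/Dockerfile.dev ./client

script:
  # Assumes a test runner that exits after one run when CI=true is set.
  - docker run -e CI=true example/client-test npm test

after_success:
  # Build the production images once, here on the CI server.
  - docker build -t example/multi-client ./client
  - docker build -t example/multi-nginx ./nginx
  - docker build -t example/multi-server ./server
  - docker build -t example/multi-worker ./worker
  # Log in and push the images to the registry.
  - echo "$DOCKER_PASSWORD" | docker login -u "$DOCKER_ID" --password-stdin
  - docker push example/multi-client
  - docker push example/multi-nginx
  - docker push example/multi-server
  - docker push example/multi-worker
```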


Multi-Container Deployments to AWS

We are going to use the new Amazon Linux 2 platform.

Amazon Linux 2 Multi Container Docker Deployment:

The new Elastic Beanstalk platform no longer uses a Dockerrun.aws.json file for deployment; it instead looks for a docker-compose.yml file in the project root.

The docker-compose-dev.yml file is used in the development environment:

```shell
docker-compose -f docker-compose-dev.yml up
docker-compose -f docker-compose-dev.yml up --build
docker-compose -f docker-compose-dev.yml down
```

Create a production-only docker-compose.yml file.
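A production-only file could look roughly like the sketch below. It assumes the images were already built elsewhere and pushed to a registry, so every service uses `image:` instead of `build:`. Postgres and Redis are left out on the assumption that in production they run as outside services reached through environment variables. The `example/` image names and the variable names are hypothetical.

```yaml
# docker-compose.yml (hypothetical production sketch)
version: "3"
services:
  client:
    image: example/multi-client
    hostname: client
  server:
    image: example/multi-server
    hostname: api
    environment:
      # Values come from the environment configured for the deployment.
      - REDIS_HOST=$REDIS_HOST
      - REDIS_PORT=$REDIS_PORT
      - PGHOST=$PGHOST
      - PGPORT=$PGPORT
      - PGUSER=$PGUSER
      - PGPASSWORD=$PGPASSWORD
      - PGDATABASE=$PGDATABASE
  worker:
    image: example/multi-worker
    hostname: worker
    environment:
      - REDIS_HOST=$REDIS_HOST
      - REDIS_PORT=$REDIS_PORT
  nginx:
    image: example/multi-nginx
    hostname: nginx
    ports:
      - "80:80"            # the only service exposed to the outside
```

Because nothing is built at deploy time, the web server only has to pull and run the images.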

A Multi-Container Deployment with Kubernetes on Google Cloud

Inside our application we have four containers running at the same time: nginx, server, client and worker. We also have a copy of Redis and Postgres, but those aren’t really containers; they are outside services.

If we started getting a lot of traffic, with many different users entering numbers to calculate Fibonacci values for, how would we respond?

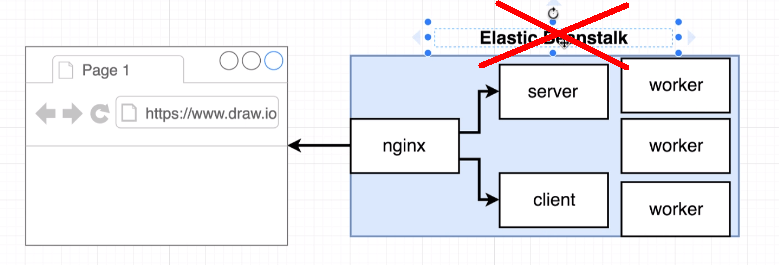

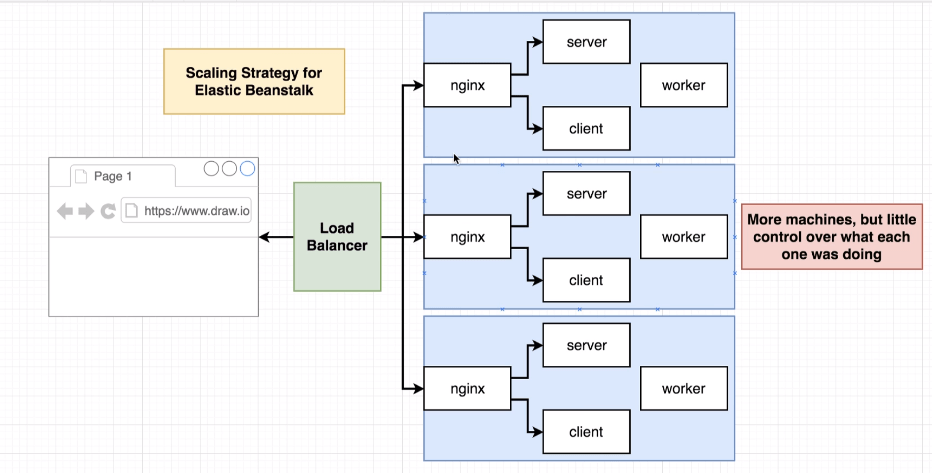

The most critical part of our application is the worker container, which holds the algorithm that performs all the calculations. If many users start making requests, we need to scale the application by increasing the number of worker containers.

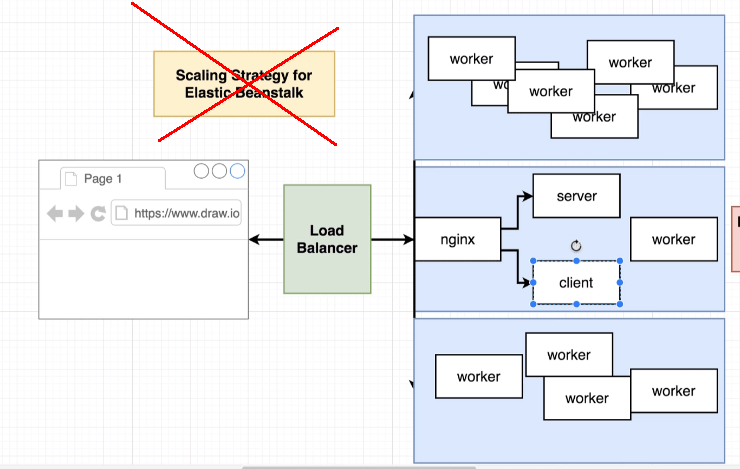

With Elastic Beanstalk, achieving this is difficult. What we would get is something like this:

But we need something like this:

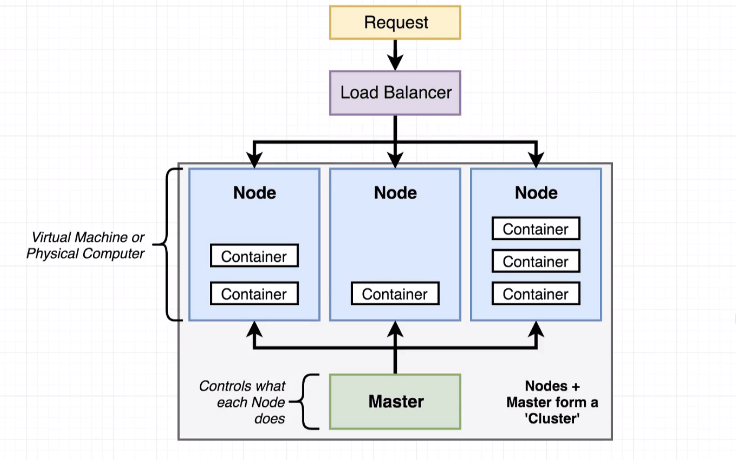

This is something that Kubernetes allows us to do. We will have control over the existing virtual machines or physical computers in the cluster.

- Kubernetes is a system for running many different containers over multiple different machines.

- We can use Kubernetes when we need to run many different containers with different images.

We have adapted the previously built application and implemented all the code needed to use Kubernetes. Note that we have removed the docker-compose files; they are no longer needed in the Kubernetes world.
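To illustrate scaling only the worker, a Kubernetes Deployment can ask for several identical worker pods through its `replicas` field. The manifest below is a minimal sketch, not the project's actual configuration: the names `worker-deployment` and `redis-cluster-ip-service`, the `component: worker` label, the `example/multi-worker` image and the environment variables are assumptions.

```yaml
# worker-deployment.yaml (hypothetical sketch)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: worker-deployment
spec:
  replicas: 3                  # number of worker pods to keep running
  selector:
    matchLabels:
      component: worker        # must match the pod template labels below
  template:
    metadata:
      labels:
        component: worker
    spec:
      containers:
        - name: worker
          image: example/multi-worker
          env:
            - name: REDIS_HOST
              value: redis-cluster-ip-service   # assumed Service name
            - name: REDIS_PORT
              value: "6379"                     # env values must be strings
```

A file like this would be applied with `kubectl apply -f worker-deployment.yaml`. The worker count can then be changed by editing `replicas` and applying again, or with `kubectl scale deployment worker-deployment --replicas=5`, without touching the other containers.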

Code download

In this case we use Google Cloud to deploy this Kubernetes application instead of AWS.

I have followed several courses, from which I obtained the information I show in my posts. The authors of these courses give several reasons for choosing Google Cloud, including the following:

- Google created Kubernetes

- AWS only “recently” got Kubernetes support

- Excellent documentation for beginners

- Kubernetes is easier to manage on Google Cloud

In my case I have handled Docker and Kubernetes from the command line of a GNU/Linux operating system. Now I am curious how Docker and Kubernetes are managed in a microservices application using Spring Boot and Google Cloud. In the next post we will see this topic.
