Creating a Production-Grade Workflow. Docker Compose.


# Create React app

npx create-react-app frontend

Necessary commands:

# Starts up a development server. For development use only

npm run start

# Runs the tests associated with the project

npm run test

# Builds a production version of the application

npm run build

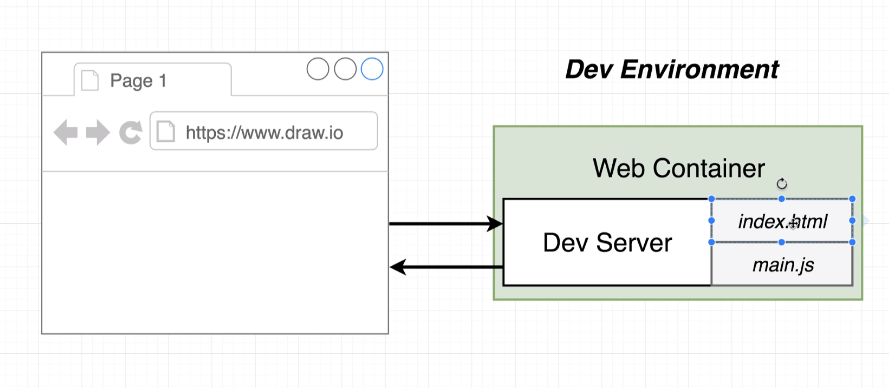

In Development

Dockerfile.dev file

This Dockerfile is only used when we run our application in a development environment. Because the file is not named Dockerfile, we pass its name to docker build with the -f flag:

docker build -f Dockerfile.dev .
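The contents of Dockerfile.dev only appear in a screenshot, so here is a minimal sketch under some assumptions. It uses a node:16-alpine base image, which is an assumed tag (any recent Node image should work). It also uses the /app working directory that the volume mappings later in this section rely on. The snippet writes the file with a heredoc:

```shell
# Assumed contents of Dockerfile.dev; the base image tag is an assumption.
cat > Dockerfile.dev <<'EOF'
FROM node:16-alpine
WORKDIR /app
# Copy package.json first so the npm install layer is cached between builds
COPY package.json .
RUN npm install
# Copy the rest of the project source
COPY . .
CMD ["npm", "run", "start"]
EOF
```

package.json is copied before the rest of the source. As a result, editing a source file does not invalidate the cached npm install layer, and rebuilds stay fast.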

- Duplicating dependencies

When we used the Create React App tool to generate a new project, it automatically installed all of our dependencies into the project directory (the node_modules folder).

In the past we did not install any of our dependencies into our working folder. Instead, we relied on our Docker image to install them when the image was built.

So at present we essentially have two copies of the dependencies, and we do not need both.

The easiest solution is to delete the node_modules folder inside our working directory.
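Run the following from the project root, which is the frontend folder generated by create-react-app. The whole cleanup is a single command:

```shell
# The image installs its own copy of the dependencies (RUN npm install),
# so the local folder is not needed. -f keeps rm quiet if it is already gone.
rm -rf node_modules
```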

- Starting the Container

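docker run -p 3000:3000 imageId

The -p option has to come before the image ID. Anything after the image ID is treated as the command to run inside the container.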

- Docker Volumes

If we want changes to be reflected inside our container after we make them, we need to either rebuild the image or come up with a cleverer solution.

Of course, we probably do not want to rebuild the image every time we change our source code.

Instead, we're going to set things up so that any changes we make to our source code are automatically propagated into the container as well.

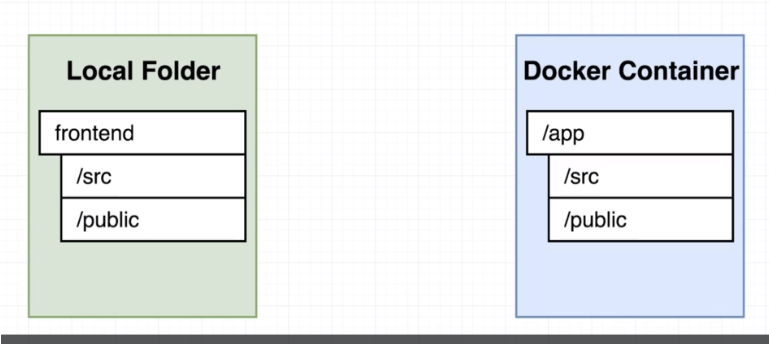

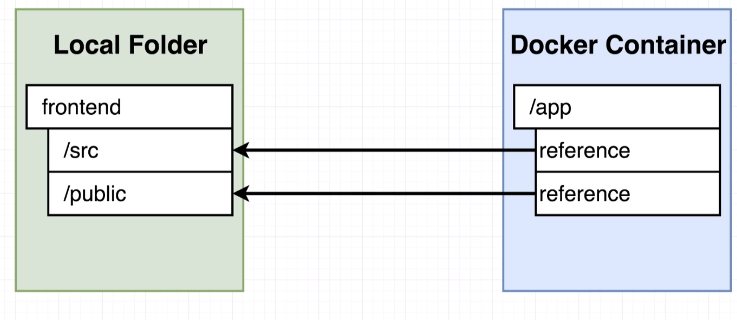

We’re essentially setting up a mapping from a folder inside the container to a folder outside the container.

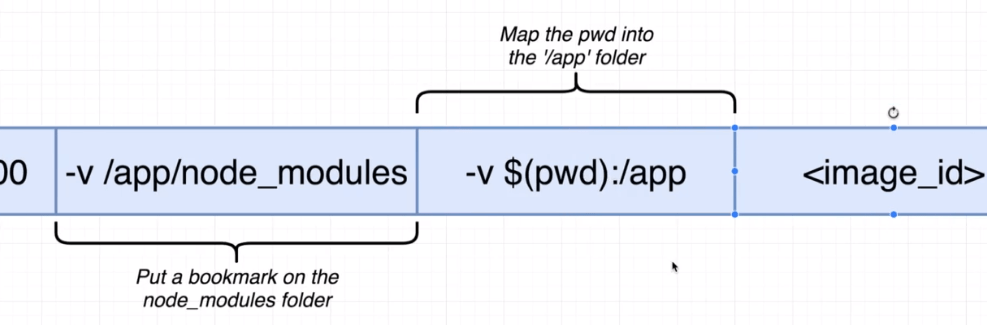

Setting up a Docker volume is sometimes a bit of a pain because of the syntax we have to use with «docker run».

The docker run command to type at our terminal:

docker run -p 3000:3000 -v /app/node_modules -v $(pwd):/app imageId

Additional flag:

-v /app/node_modules

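This flag has no colon, so it does not map /app/node_modules to anything on our machine. It acts as a placeholder that keeps the node_modules folder installed inside the image. We need it because we deleted our local node_modules folder to avoid duplicating dependencies. Without it, the -v $(pwd):/app mapping would hide the container's dependencies.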
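To keep the flags straight, the long command can be wrapped in a small shell function. The name run_dev is hypothetical. The flags are exactly the ones from the command above, each annotated:

```shell
# Hypothetical helper. Usage: run_dev IMAGE_ID
run_dev() {
  # -p 3000:3000          forward host port 3000 to the dev server's port 3000
  # -v /app/node_modules  no colon: keep the node_modules installed in the image
  # -v "$(pwd)":/app      map the current host folder onto /app in the container
  docker run -p 3000:3000 -v /app/node_modules -v "$(pwd)":/app "$1"
}
```

With some react-scripts versions the development server exits right after starting when no standard input is attached. Adding -it to the docker run line avoids that.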

- Docker Compose

Running this long command every time is clearly a pain, and one of the main purposes of Docker Compose is to make executing docker run easier. Even though this time we have only a single Docker image, we can still use Docker Compose to dramatically simplify the command we have to run to start up our container for development.

So let's create a Docker Compose file and encode in it the port setting and the two volumes we need to create inside the container.

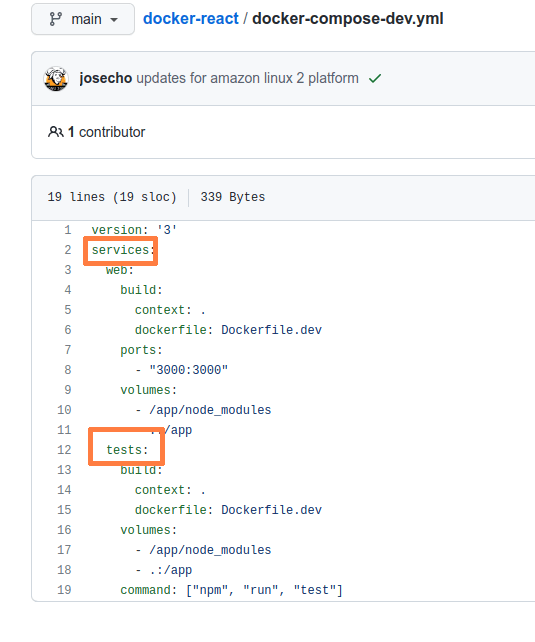

docker-compose-dev.yml file

docker-compose -f docker-compose-dev.yml up

By setting up that volume mount in docker-compose-dev.yml:

volumes:
  - /app/node_modules
  - .:/app

Any time the Docker container looks into the /app folder, it essentially gets a reference back to the local files on our machine.
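The full docker-compose-dev.yml only appears in a screenshot. Here is a sketch that encodes the port setting and the two volumes discussed above. The service name web, the "3" file version and the stdin_open setting are assumptions:

```shell
# Assumed contents of docker-compose-dev.yml; the service name "web" is hypothetical.
cat > docker-compose-dev.yml <<'EOF'
version: "3"
services:
  web:
    # Build from the development Dockerfile instead of the default "Dockerfile"
    build:
      context: .
      dockerfile: Dockerfile.dev
    # Equivalent of docker run -i; some react-scripts versions exit without it
    stdin_open: true
    ports:
      - "3000:3000"
    volumes:
      # Keep the node_modules folder installed inside the image
      - /app/node_modules
      # Map the current folder on the host to /app in the container
      - .:/app
EOF
```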

COPY . .

Executing tests

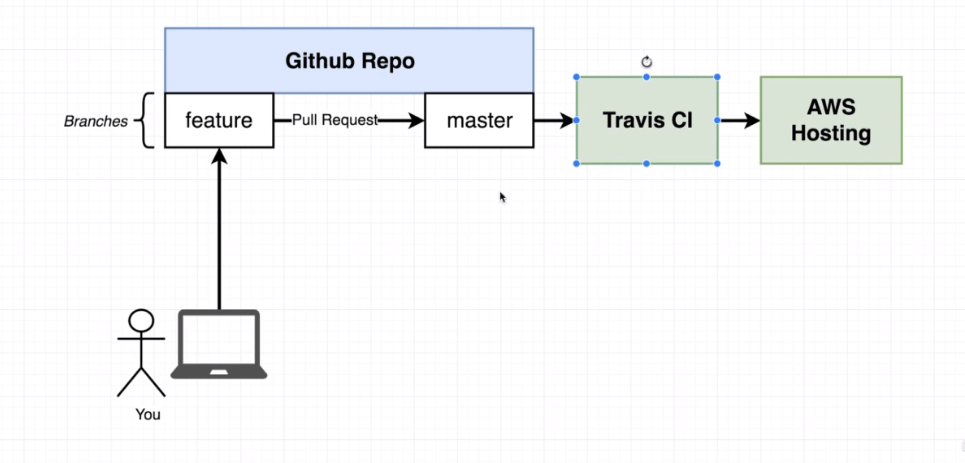

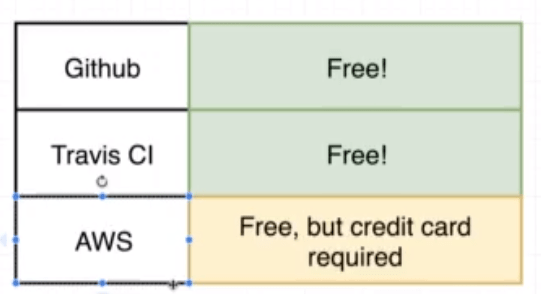

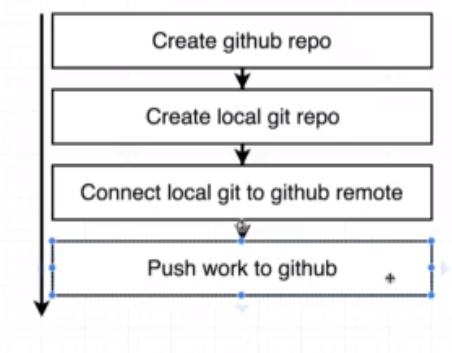

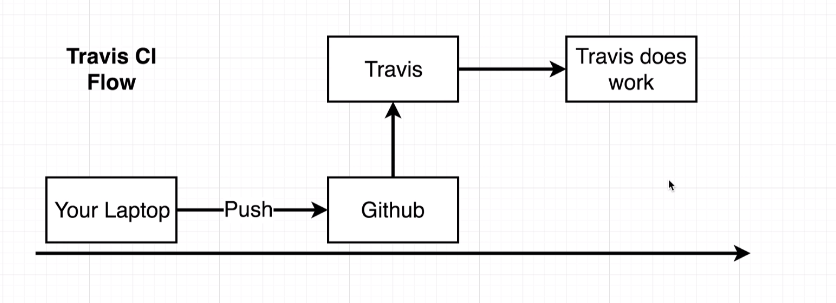

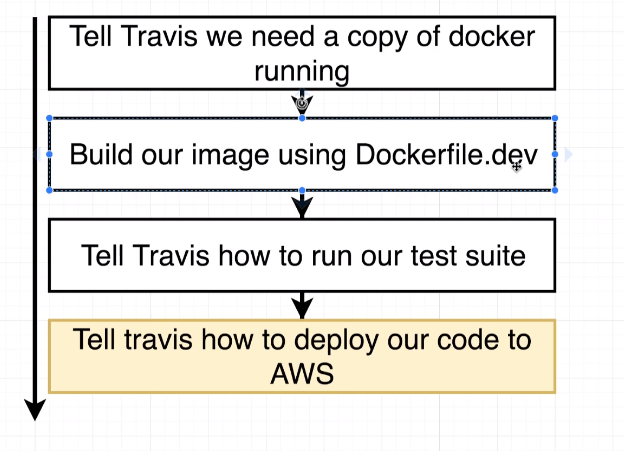

Travis CI: a continuous integration service that can run the tests for our project.

docker build -f Dockerfile.dev .

docker run imageId npm run test

Press CONTROL + C to stop the tests, then run them again with the -it flags:

docker run -it imageId npm run test

You'll notice that when we add the -it flags, we get a full-screen experience with full interactivity: the test runner now responds to our keystrokes.
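Each of the two flags contributes part of that interactivity. The hypothetical helper below annotates them. The image ID is the one printed by the development build above:

```shell
# Hypothetical helper. Usage: run_tests IMAGE_ID
run_tests() {
  # -i keeps STDIN open so keystrokes (Enter, w, p, q...) reach the test runner
  # -t allocates a pseudo-terminal so the runner can draw its full-screen view
  # "npm run test" overrides the CMD defined in Dockerfile.dev
  docker run -it "$1" npm run test
}
```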

But again, we have the problem that changes we make to the tests are not reflected in the container.

We could use a very similar approach to the one we just used in our Docker Compose file. We set up a second service in docker-compose-dev.yml and assign it the same volumes, and the sole purpose of that service is to run our test suite.

That is the approach we are going to take, even though it is not quite a perfect solution.
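Here is a sketch of docker-compose-dev.yml with that second service added. The service names web and tests are hypothetical. The tests service reuses the development build and volumes and only overrides the command:

```shell
# Assumed docker-compose-dev.yml with a second, hypothetical "tests" service.
cat > docker-compose-dev.yml <<'EOF'
version: "3"
services:
  web:
    build:
      context: .
      dockerfile: Dockerfile.dev
    stdin_open: true
    ports:
      - "3000:3000"
    volumes:
      - /app/node_modules
      - .:/app
  tests:
    # Same image and volumes as "web", so test edits on the host are visible
    build:
      context: .
      dockerfile: Dockerfile.dev
    volumes:
      - /app/node_modules
      - .:/app
    # Override the image's default command to start the test runner instead
    command: ["npm", "run", "test"]
EOF
```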

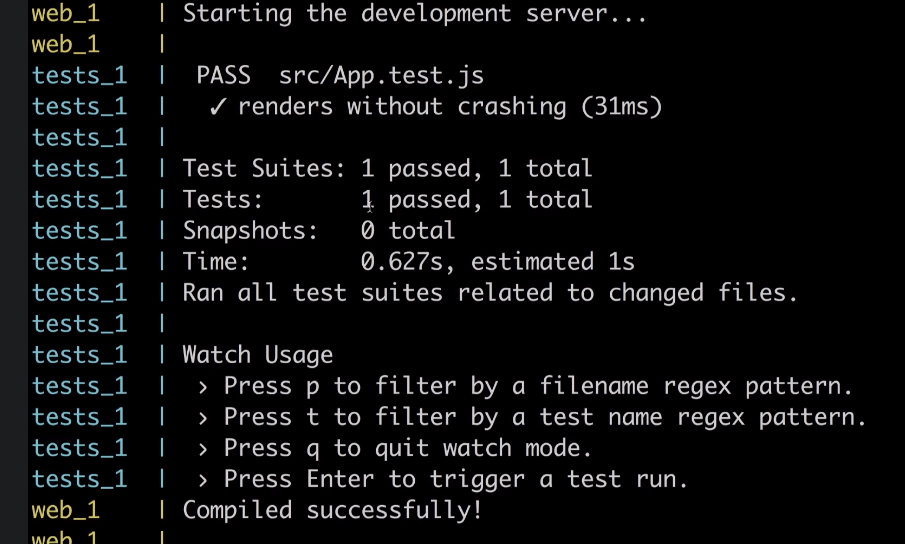

docker-compose -f docker-compose-dev.yml up

This works, but there is a small problem with this approach as well.

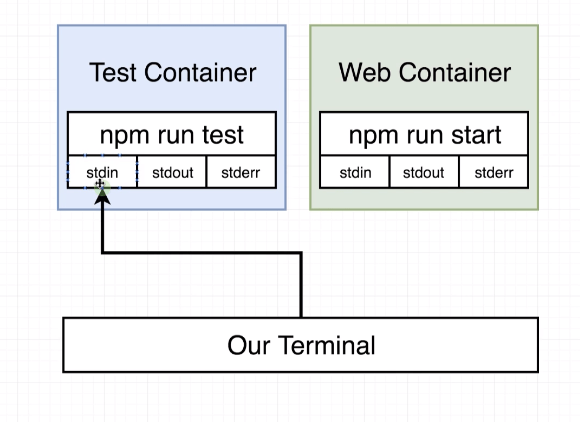

The downside of this approach is that all the output from our test suite appears in Docker Compose's logging interface, and we can't send anything to the container's standard input. So I can't press Enter to rerun the test suite, or press w to show the test runner's options.

Let's open a second terminal window and get the ID of the running test container:

docker ps

docker attach idTestContainer

This attaches our terminal to the standard input, standard output and standard error of the primary process inside that container.
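Copying the container ID by hand is error-prone. docker-compose ps -q prints only the container IDs of the services you name, so the lookup can be scripted. The sketch below makes two assumptions: the compose file is docker-compose-dev.yml and the test service is named tests. attach_tests is a hypothetical helper name:

```shell
# Hypothetical helper; assumes docker-compose-dev.yml and a service named "tests".
attach_tests() {
  # "ps -q tests" prints just the ID of the tests container
  container_id=$(docker-compose -f docker-compose-dev.yml ps -q tests)
  docker attach "$container_id"
}
```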

Unfortunately, this is as good as it gets with the Docker compose solution for running our tests.

Unfortunately, even with docker attach, we can't manipulate our test suite by entering special commands such as p, q, t or Enter. With the Docker Compose solution, that is just not an option.

An alternative solution, which does not need the test service (although we don't think it is the best one), is to open a second terminal and run:

docker ps

docker exec -it containerId npm run test

This gives us full interactivity, and changes to the tests are picked up.

So this is definitely a solution, but we don’t necessarily think it’s as good as it possibly could be.

It requires you to start up Docker Compose (the test service is not necessary), then get the ID of the running container and run that docker exec command, which is hard to remember off the top of your head.
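One way to avoid looking up the ID is docker-compose exec, which finds the running container by service name. Like docker exec -it, it allocates an interactive terminal by default. The sketch below assumes the compose file is docker-compose-dev.yml and the development service is named web. exec_tests is a hypothetical helper name:

```shell
# Hypothetical helper; assumes docker-compose-dev.yml and a service named "web".
exec_tests() {
  # Runs the test suite as a second process inside the running dev container
  docker-compose -f docker-compose-dev.yml exec web npm run test
}
```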

So we have two solutions here. Neither of them is 100% ideal, but at least you can pick the one you like a little better.

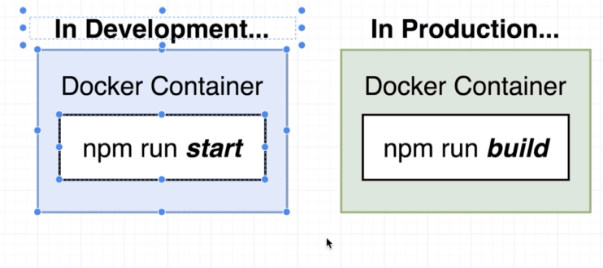

In Production

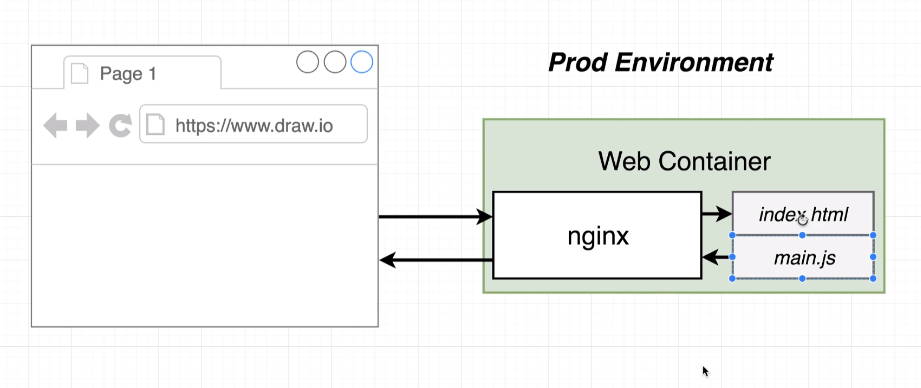

We’re now going to start working on a second Docker file.

This second Docker file is going to make a second image that’s going to run our application specifically in production.

Dockerfile file

Inside this Dockerfile, we're going to have two different blocks of configuration: one implements what we'll call the build phase, and the other implements what we'll call the run phase.
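The two phases map naturally onto a Docker multi-stage build. Here is a sketch of the production Dockerfile written with a heredoc. The node:16-alpine tag and the stage name builder are assumptions. /app/build is where npm run build writes its output, and /usr/share/nginx/html is the folder the official nginx image serves by default:

```shell
# Assumed contents of the production Dockerfile (a multi-stage build).
cat > Dockerfile <<'EOF'
# Build phase: install dependencies and create the optimized build in /app/build
FROM node:16-alpine AS builder
WORKDIR /app
COPY package.json .
RUN npm install
COPY . .
RUN npm run build

# Run phase: serve the static files with nginx (listens on port 80 by default)
FROM nginx
# Only the build output is copied; node_modules and the source stay behind
COPY --from=builder /app/build /usr/share/nginx/html
EOF
```

Because only the final stage ends up in the image, the production image contains nginx and the static files but not Node or the dependencies used to build them.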

npm run build process

docker build .

docker run -p 8080:80 imageId

We should be able to open our browser, navigate to localhost:8080 and see the default React welcome page.
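Tagging the image with docker build -t lets you run it by name instead of copying the image ID. run_prod and the tag you pass are hypothetical:

```shell
# Hypothetical helper. Usage: run_prod TAG
run_prod() {
  # docker build -t names the image so docker run can refer to it by that tag
  # -p 8080:80 maps host port 8080 to nginx's port 80 inside the container
  docker build -t "$1" . && docker run -p 8080:80 "$1"
}
```

For example, run_prod myapp builds the production image and serves it on localhost:8080.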
